Ryan Mark and I built enviroVOTE to help people visualize the environmental impact of the 2008 elections. We designed it in two evenings and made it real in a three-and-a-half-day-long bender of data crunching and code.

This is the story of that time.

+ coffee =

Sunday evening, 26 October: the concept

The idea struck us when Ryan and I discovered we had a common problem: homework. Ryan was on the hook to produce a story about the environment for News 21’s election night coverage, and I needed to build an example presenting news data in some interesting way using charts and graphs. So we decided to combine our efforts and make something that would visualize environmental information about the election.

We searched for data to present, and found that it came in many shapes, like a candidate’s track record of support on environmental issues, or statistics on national parks, nuclear power and everything in between. But the most compelling data set we found was not stats- or issue-based: endorsements made by environmental groups.

Statistics were cut because they’re only peripherally related to the races being run. It’s not particularly interesting to say something like “in states with more than five hydroelectric power sources, the Democratic candidate prevailed 18% of the time.”

Only sportscasters can get away with that crap.

Why not issues, then? They’re hard to quantify. Candidate websites are frequently slippery, ambiguous things, and we found that few politicians responded to efforts that would make their positions crystal clear, like Project Vote Smart’s Political Courage Test and Candid Answers’ Voters Guide to the Environment. The best data we could find were candidates’ voting records, but without understanding the nuance of each piece of legislation, it’s nearly impossible to determine if a vote was for or against the goodness of the earth. (Also, only incumbents have voting records.)

An endorsement is a true-false, unambiguous, easy-to-count thing. Environmental groups like the Sierra Club and the League of Conservation Voters publish their support for candidates online. Even better, the aforementioned Project Vote Smart (a volunteer group dedicated to strengthening our democracy through access to information) aggregates endorsements and makes them readily available for current and historical races. And Vote Smart makes them available via an API, so others can mash up their data, just like we were itching to do.

Wednesday evening, 29 October: the design

A second fury of inspiration led to the design of the site. Marcel Pacatte, my instructor and head of Medill’s Chicago newsroom, was our source of journalistic wisdom. He and I identified our audience and discussed the angles and presentation methods that would best serve them. Obvious ideas like red/blue states and a map of the nation’s greenness were tossed — maps aren’t all that good at showing off numbers. (Notable exceptions include cartograms and the famous diagram of Napoleon’s march to Moscow, neither of which seemed sensible metaphors to adopt.)

Scope creep, be damned!

We decided to not make a voter’s guide, since there was little time before the election for folks to find the site, and to instead make something that’s interesting the day of the elections, and useful in the days following. So we looked for numbers to support that mission.

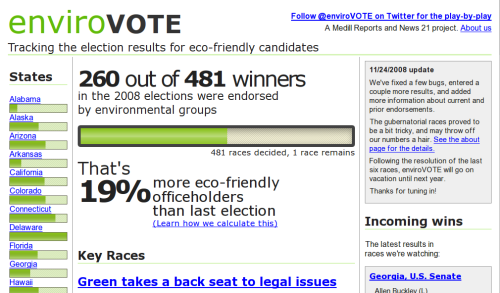

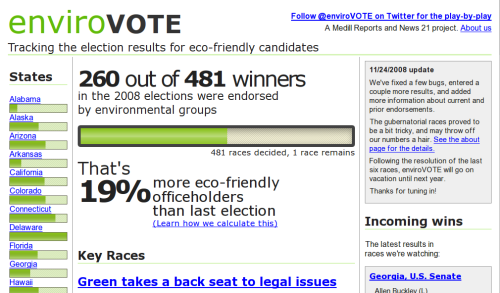

Counting environmentally friendly victories would be both timely on election night and purposeful later. We could calculate a win for the earth by counting endorsements: if the winning candidate had more endorsements than the opponents, it was a green race. This was easy to aggregate nationally as well as by state.
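
As a rough illustration (not the site's actual code), the counting rule could be sketched in Python like this. The record layout and the names `is_green`, `green_share` and `green_share_by_state` are hypothetical, the sample races are made up, and treating a tie as "not green" is an assumption.

```python
from collections import defaultdict

# Made-up race records; the field names are hypothetical, for illustration only.
races = [
    {"state": "IL", "winner": "A", "endorsements": {"A": 3, "B": 0}},
    {"state": "IL", "winner": "D", "endorsements": {"C": 2, "D": 1}},
    {"state": "RI", "winner": "E", "endorsements": {"E": 1, "F": 1}},
]


def is_green(race):
    """True if the winner has strictly more endorsements than any opponent.

    Assumption: a tie, or a winner with zero endorsements, is not a green race.
    """
    counts = race["endorsements"]
    winner_count = counts.get(race["winner"], 0)
    others = [n for name, n in counts.items() if name != race["winner"]]
    return winner_count > max(others, default=0)


def green_share(races):
    """Fraction of races that were green, or None when there are no races."""
    if not races:
        return None
    return sum(is_green(r) for r in races) / len(races)


def green_share_by_state(races):
    """Group races by state and compute the green share for each one."""
    by_state = defaultdict(list)
    for race in races:
        by_state[race["state"]].append(race)
    return {state: green_share(rs) for state, rs in by_state.items()}


national = green_share(races)
per_state = green_share_by_state(races)
```

The same function serves both the national number and the per-state bars, which is why the aggregation is cheap: a state is just a smaller list of races.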

And by running the same numbers on the previous races (two years ago for the House, six for the Senate, etc.) we could calculate the change in the environmental-friendliness of the nation’s elected officials, a figure that became known as “environmintiness.”
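
The exact formula behind that figure isn't spelled out here, so the following is only a sketch under one assumption: that the change is the relative percent change between the share of green winners in the previous races and the share in the current ones. The function name `percent_change` and the counts are invented for illustration; a difference in percentage points would be an equally plausible reading.

```python
def percent_change(previous_share, current_share):
    """Relative change from the previous green share to the current one, in percent.

    Assumption: "change" means relative change; the original formula is not given.
    """
    if previous_share == 0:
        raise ValueError("percent change is undefined when the previous share is zero")
    return (current_share - previous_share) / previous_share * 100


# Invented counts: 40 of 100 green winners last time, 50 of 100 this time.
previous = 40 / 100
current = 50 / 100
change = percent_change(previous, current)  # roughly a 25 percent increase
```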

In addition, some races potentially held more impact for the environment than others — because of their location or the candidates running — so we decided it was necessary to highlight these key races alongside the numbers.

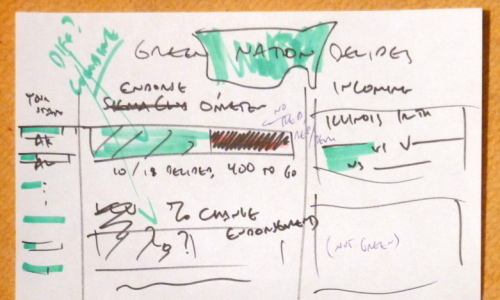

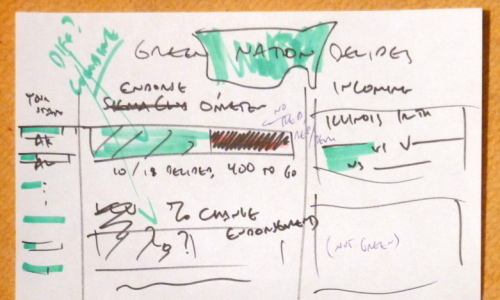

The sketch that served as the primary design document for enviroVOTE

In a whirlwind sketch-a-thon, the design for the site flew together. We would show off the two big numbers in the simplest possible way. No maps, pies or (praise the lord!) Flash necessary. They’re both just percentages. To set off one from the other, we decided on a percentage for the percent change, and a one-bar chart for the victory counts, in aggregate and for individual states.

Users would be interested in seeing results from their home state, so we made the states our primary navigation, and listed them, along with their bar chart, down the left side of the page. (We explicitly decided to not use a map for navigation, like most sites do. If I lived in Rhode Island, I’d effing hate those sites.)

Putting the big numbers front and center and listing the incoming race results down the right gave users an up-to-the-minute snapshot of the evening. The writeups about key races, though important, were our least timely information, so we made them big and bold, but placed them mostly below the fold.

We produced a simple design, just three pages — home, a state and a race — each presenting more detail as you drilled down.

Saturday and Sunday morning, 1-2 November: the development

Development began Saturday morning. We decided to build the site on Django, the free and open source web development framework that we were concurrently using to build News Mixer, the big final project of our master’s degree program. (If you’re interested in our reasons why, and how it all works, check out my post that explains the same stuff re: News Mixer.)

We brainstormed names for our new baby, and immediately checked to see if the URLs were available. envirovote.us was the first one we really liked, so we bought it and started running. Ryan designed a logo and whipped up a color scheme, and thus a brand was born.

Improvising the details, we built the site very close to how it was designed. (The initial sketches were mine, but Ryan gets the props for making it look so damn sexy.) Coding the site took about a day and a half, minus time for Ryan to go home and sleep, and for me to cook soup.

We used the awesome, free tools at Google Code to list tasks and ideas, manage our source code, and track defects. The simple concept and excellent tools helped make this a relatively issue-free development cycle. Django, FTW!

Sunday afternoon and Monday, 2-3 November: the gathering, massaging, and jamming in of data

Pretty much finished with the code, minus subsequent bug fixes and tweaks, we started on the data.

Ryan used the Project Vote Smart API to gather information on current and historical races: the states, districts, and candidates that form the backbone of our system. He wrote Python scripts to repeatedly call the API, munge the response, and aggregate all of the races, candidates, wacky political parties, and the rest into files we could then pump into the database.

I attacked from the other side and scoured environmental groups’ websites, as well as the endorsement data provided by Project Vote Smart, to collect the endorsements we use to calculate the big numbers.

Once all the data was collected into text files, we then wrote more scripts to read those files, scrub the data of inconsistencies, poor spelling, and other weirdness, and finally fill the database.
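
For a flavor of what that scrubbing looks like, here is a minimal sketch, not the scripts themselves. It assumes the text files are CSV with `name`, `state` and `party` columns, which is a guess; the alias table and the function names `scrub_row` and `scrub_file` are hypothetical, and the sample rows are made up. The last step, filling the database, is left out.

```python
import csv
import io

# Hypothetical spelling fixes; a real list would grow as the weirdness turned up.
PARTY_ALIASES = {
    "dem": "Democratic",
    "democrat": "Democratic",
    "democratic": "Democratic",
    "gop": "Republican",
    "rep": "Republican",
    "republican": "Republican",
}


def scrub_row(row):
    """Normalize whitespace, state casing and party spelling for one record."""
    name = " ".join(row["name"].split())  # collapse runs of spaces
    state = row["state"].strip().upper()
    party_raw = row["party"].strip()
    party = PARTY_ALIASES.get(party_raw.lower(), party_raw)
    return {"name": name, "state": state, "party": party}


def scrub_file(handle):
    """Read CSV rows from a file-like object, clean them, and drop duplicates."""
    seen = set()
    cleaned = []
    for row in csv.DictReader(handle):
        clean = scrub_row(row)
        key = (clean["name"].lower(), clean["state"])
        if key in seen:
            continue  # same candidate listed twice
        seen.add(key)
        cleaned.append(clean)
    return cleaned


# Made-up sample standing in for one of the collected text files.
sample = io.StringIO(
    "name,state,party\n"
    "  Jane   Doe ,il,democrat\n"
    "Jane Doe,IL,Democratic\n"
    "John Roe,ri ,GOP\n"
)
rows = scrub_file(sample)
```

Unknown party names pass through untouched rather than being guessed at, so the wacky political parties survive the cleanup.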

All of this took a day and a half, far longer than we had hoped, and as much time as was necessary to build the website. I did not cook soup. We ordered in.

Coffee, nerd sweat… smells like software. Yet, curiously minty-fresh.

Tuesday, 4 November

After attending class all day in Evanston, Ryan and I headed downtown for an evening of data input and cursing at screens.

Julia Dilday and Alexander Reed watched the AP wire all night, tracking races, gathering results, and entering them into the system. I cannot express how much more difficult this was than we anticipated. Julia and Alex: thank you thank you thank you thank you.

Ryan kept the system humming through the night. He tamed the beast: keeping the site online, fixing bugs, and updating the administrative interface in an effort to improve the poor working conditions of Julia and Alex.

I ran the public relations effort: taking interviews, helping input incoming races, and getting the word out about our little project. I also gave enviroVOTE a voice. We set up a Twitter account to tell the nation about environmintiness as the results came in. (For a time, the site automatically twittered with each race result, until we realized that it was sending far more tweets than anyone would ever want to read, and turned it off.)

The aftermath

I’m told the presidential race was noteworthy, though I can’t recall who won — it was just one of nearly 500 races we recorded that night, and we weren’t watching the TV.

Since the 2nd, we’ve fixed a few bugs and we’ve slowly added the final race results as they’ve trickled in. The site is not nearly as dynamic as it was on election night, but maybe we’ll have another few days free next year.